Cybersecurity & Data Privacy Capability Maturity Model (C|P-CMM)

Many competing models exist to demonstrate maturity. Given the available choices, the SCF decided to leverage an existing framework rather than reinvent the wheel. In simple terms, we added control-level criteria to an existing CMM.

The C|P-CMM is meant to solve the problem of objectivity in both establishing and evaluating cybersecurity and privacy controls. There are four (4) main objectives for the C|P-CMM:

- Provide CISOs/CPOs/CIOs with objective criteria that can be used to establish expectations for a cybersecurity & privacy program;

- Provide objective criteria for project teams so that secure practices are appropriately planned and budgeted for;

- Provide minimum criteria that can be used to evaluate third-party service provider controls; and

- Provide a means to perform due diligence of cybersecurity and privacy practices as part of Mergers & Acquisitions (M&A).
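As a rough illustration of the third-party and M&A objectives, the sketch below compares assessed maturity against minimum targets and lists the shortfalls. It assumes maturity is recorded per control as an integer from 0 to 5 (the levels defined later in this document). The control identifiers, the `MaturityGap` class and the `find_gaps` function are hypothetical names invented for this example, and treating an unassessed control as level 0 is an assumption rather than SCF guidance.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MaturityGap:
    control_id: str
    target: int
    assessed: int

    @property
    def shortfall(self) -> int:
        # Number of levels by which the assessed maturity misses the target.
        return max(self.target - self.assessed, 0)


def find_gaps(targets: dict[str, int], assessed: dict[str, int]) -> list[MaturityGap]:
    """Return controls whose assessed maturity is below the minimum target.

    Assumption: a control missing from `assessed` is treated as level 0.
    """
    gaps = []
    for control_id, target in targets.items():
        level = assessed.get(control_id, 0)
        if level < target:
            gaps.append(MaturityGap(control_id, target, level))
    # Largest shortfall first, then alphabetical for a stable order.
    return sorted(gaps, key=lambda g: (-g.shortfall, g.control_id))


# Hypothetical control identifiers and levels, for illustration only.
minimum_targets = {"CTRL-A": 3, "CTRL-B": 2, "CTRL-C": 2}
vendor_assessment = {"CTRL-A": 2, "CTRL-B": 2}

for gap in find_gaps(minimum_targets, vendor_assessment):
    print(f"{gap.control_id}: target L{gap.target}, assessed L{gap.assessed}")
```

A report like this only summarizes decisions an assessor has already made; it does not replace the evidence behind each assessed level.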

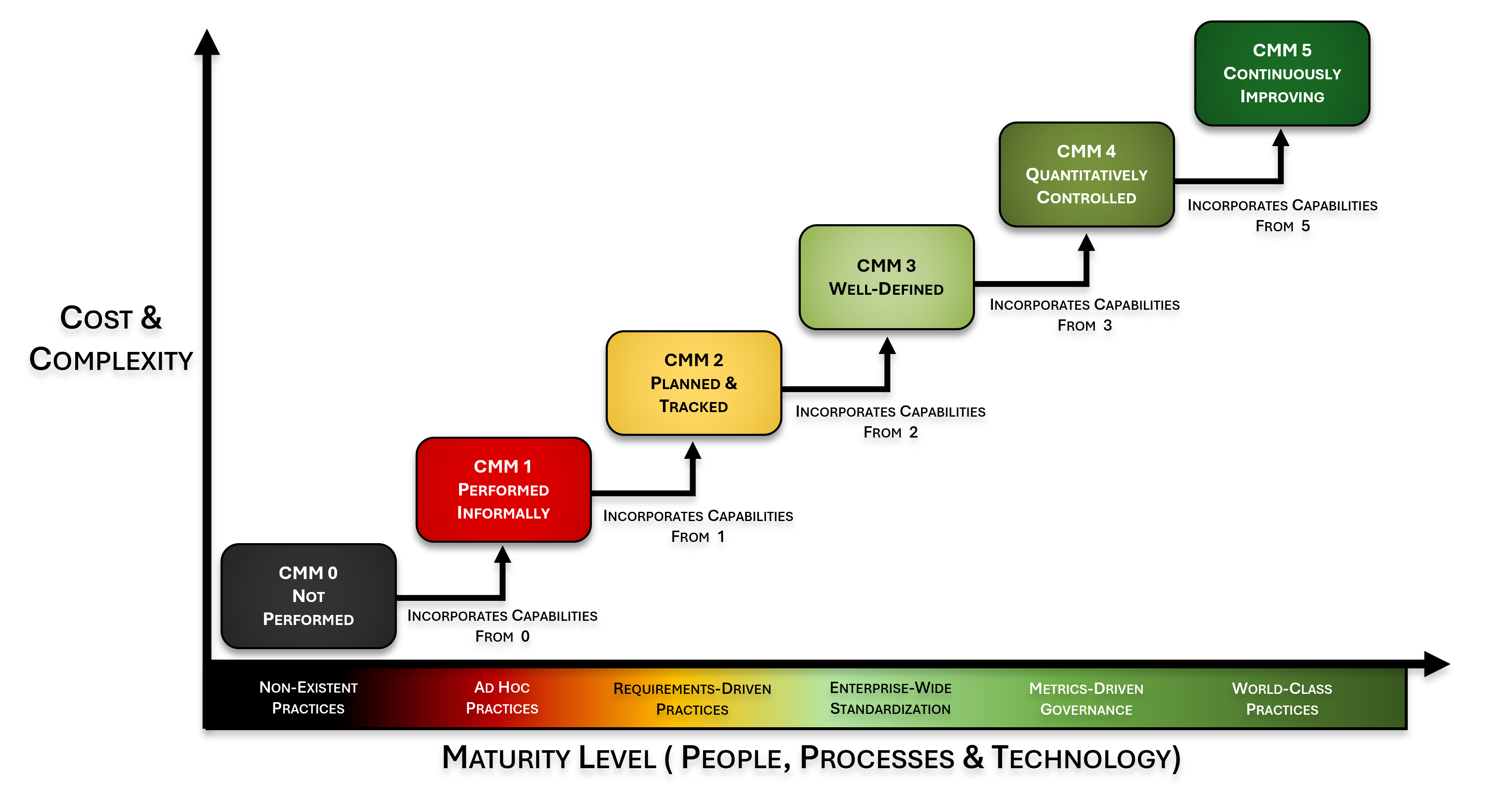

Nested Approach To Maturity

By using the term “nested” regarding maturity, we refer to how the C|P-CMM’s control criteria were written to acknowledge that each succeeding level of maturity is built upon its predecessor. Essentially, you cannot run without first learning how to walk. Likewise, you cannot walk without first learning how to crawl. This approach to defining cybersecurity & privacy control maturity is how the C|P-CMM is structured.

The C|P-CMM draws upon the high-level structure of the Systems Security Engineering Capability Maturity Model v2.0 (SSE-CMM), since we felt it was the best model to demonstrate varying levels of maturity for people, processes and technology at a control level. If you are unfamiliar with the SSE-CMM, it is well worth your time to read through the SSE-CMM Model Description Document that is hosted by the US Defense Technical Information Center (DTIC).

Maintaining The Integrity of Maturity-Based Criteria

It is unfortunate that it must be explicitly stated, but a “maturity model” is entirely dependent upon the ethics and integrity of the individual(s) involved in the evaluation process. This issue is often rooted in pressure the assessor perceives to designate a control as more mature than it is (e.g., dysfunctional management influence). Regardless of the reason, it must be emphasized that consciously designating a higher level of maturity than the objective criteria support, in order to make an organization appear more mature, should be considered fraud. Fraud is a broad term that includes “false representations, dishonesty and deceit.”

This stance on fraudulent misrepresentations may appear harsh, but it accurately describes the situation. There is no room in cybersecurity and data protection operations for unethical parties, so the SCF Council published this guidance on what a “reasonable party perspective” should be. This provides objectivity to minimize the ability of unethical parties to abuse the intended use of the C|P-CMM.

Divining A Maturity Level Decision From Control-Level Maturity Criteria

The following two (2) questions should be kept in mind when evaluating the maturity of a control (or Assessment Objective (AO)):

- Do I have reasonable evidence to defend my analysis/decision?

- If there was an incident and I was deposed in a legal setting, can I justify my analysis/decision without perjuring myself?

Do you need to answer “yes” to every bulleted criterion under a level of maturity in the C|P-CMM? No. We recognize that every organization is different. Therefore, the maturity criteria associated with SCF controls are meant to help establish what would reasonably exist for each level of maturity. Fundamentally, the decision comes down to assessor experience, professional competence and common sense.

Maturity (Governance) ≠ Assurance (Security)

While a more mature implementation of controls can equate to an increased level of security, higher maturity and higher assurance are not mutually inclusive. From a practical perspective, maturity is simply a measure of governance activities pertaining to a specific control or set of controls. Maturity does not equate to an in-depth analysis of the strength and depth of the control being evaluated (e.g., rigor).

According to NIST, assurance is “grounds for confidence that the set of intended security controls in an information system are effective in their application.” Increased rigor in control testing is what leads to increased assurance. Therefore, increased rigor and increased assurance are mutually inclusive.

The SCF Conformity Assessment Program (SCF CAP) leverages three (3) levels of rigor. The SCF CAP’s levels of rigor utilize maturity-based criteria to evaluate a control, since a maturity target can provide context for “what right looks like” at a particular organization:

- Level 1 (Basic) - Basic assessments provide a level of understanding of the security measures necessary for determining whether the safeguards are implemented and free of obvious errors.

- Level 2 (Focused) - Focused assessments provide a level of understanding of the security measures necessary for determining whether the safeguards are implemented and free of obvious / apparent errors and whether there are increased grounds for confidence that the safeguards are implemented correctly and operating as intended.

- Level 3 (Comprehensive) - Comprehensive assessments provide a level of understanding of the security measures necessary for determining whether the safeguards are implemented and free of obvious errors and whether there are further increased grounds for confidence that the safeguards are implemented correctly and operating as intended on an ongoing and consistent basis and that there is support for continuous improvement in the effectiveness of the safeguards.
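The three levels of rigor are cumulative: each one keeps the determinations of the level below it and adds its own. The sketch below models that relationship. The `Rigor` enum, the `ADDED_DETERMINATIONS` mapping and the `determinations` function are hypothetical names, and the strings are paraphrases of the level descriptions above, not official SCF CAP wording.

```python
from enum import IntEnum


class Rigor(IntEnum):
    BASIC = 1
    FOCUSED = 2
    COMPREHENSIVE = 3


# What each level adds on top of the level below it (paraphrased).
ADDED_DETERMINATIONS = {
    Rigor.BASIC: [
        "safeguards are implemented and free of obvious errors",
    ],
    Rigor.FOCUSED: [
        "increased confidence that safeguards are implemented correctly "
        "and operating as intended",
    ],
    Rigor.COMPREHENSIVE: [
        "safeguards operate as intended on an ongoing and consistent basis",
        "continuous improvement in safeguard effectiveness is supported",
    ],
}


def determinations(rigor: Rigor) -> list[str]:
    """List everything an assessment at the given rigor is expected to determine."""
    result = []
    for level in Rigor:  # iterates in definition order: BASIC, FOCUSED, COMPREHENSIVE
        if level <= rigor:
            result.extend(ADDED_DETERMINATIONS[level])
    return result


for item in determinations(Rigor.FOCUSED):
    print("-", item)
```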

C|P-CMM Levels

The C|P-CMM draws upon the high-level structure of the Systems Security Engineering Capability Maturity Model v2.0 (SSE-CMM), since we felt it was the best model to demonstrate varying levels of maturity for people, processes and technology at a control level. If you are unfamiliar with the SSE-CMM, it is well worth your time to read through the SSE-CMM Overview Document that is hosted by the US Defense Technical Information Center (DTIC).

The six (6) C|P-CMM levels are:

- CMM 0 – Not Performed

- CMM 1 – Performed Informally

- CMM 2 – Planned & Tracked

- CMM 3 – Well-Defined

- CMM 4 – Quantitatively Controlled

- CMM 5 – Continuously Improving
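The six levels and the nested approach described earlier can be sketched as an ordered enumeration plus a function that stops at the first level that is not satisfied. This is a minimal sketch, not an SCF tool: `CmmLevel` and `nested_maturity` are hypothetical names, and the boolean for each level stands for the assessor's overall conclusion about that level, not an automated tally of individual criteria.

```python
from enum import IntEnum


class CmmLevel(IntEnum):
    NOT_PERFORMED = 0
    PERFORMED_INFORMALLY = 1
    PLANNED_AND_TRACKED = 2
    WELL_DEFINED = 3
    QUANTITATIVELY_CONTROLLED = 4
    CONTINUOUSLY_IMPROVING = 5


def nested_maturity(judgments: dict[CmmLevel, bool]) -> CmmLevel:
    """Return the highest level reached without skipping a predecessor.

    `judgments` maps levels 1-5 to the assessor's conclusion for that level.
    Assumption: a level missing from the mapping counts as not satisfied.
    """
    achieved = CmmLevel.NOT_PERFORMED
    for level in sorted(CmmLevel)[1:]:  # levels 1 through 5, in order
        if not judgments.get(level, False):
            break  # a gap here caps maturity, whatever the higher levels say
        achieved = level
    return achieved


example = {
    CmmLevel.PERFORMED_INFORMALLY: True,
    CmmLevel.PLANNED_AND_TRACKED: True,
    CmmLevel.WELL_DEFINED: False,
    CmmLevel.QUANTITATIVELY_CONTROLLED: True,  # metrics exist, but L3 is not met
}
print(nested_maturity(example).name)
```

In the example, the control is capped at CMM 2 even though metrics exist, because the enterprise-wide standardization expected at CMM 3 is missing.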

C|P-CMM Level 0 (L0) – Not Performed

This level of maturity is defined as “non-existent practices,” where the control is not being performed:

- Practices are non-existent, where a reasonable person would conclude the control is not being performed.

- Evidence of due care and due diligence does not exist to demonstrate compliance with applicable statutory, regulatory and/or contractual obligations.

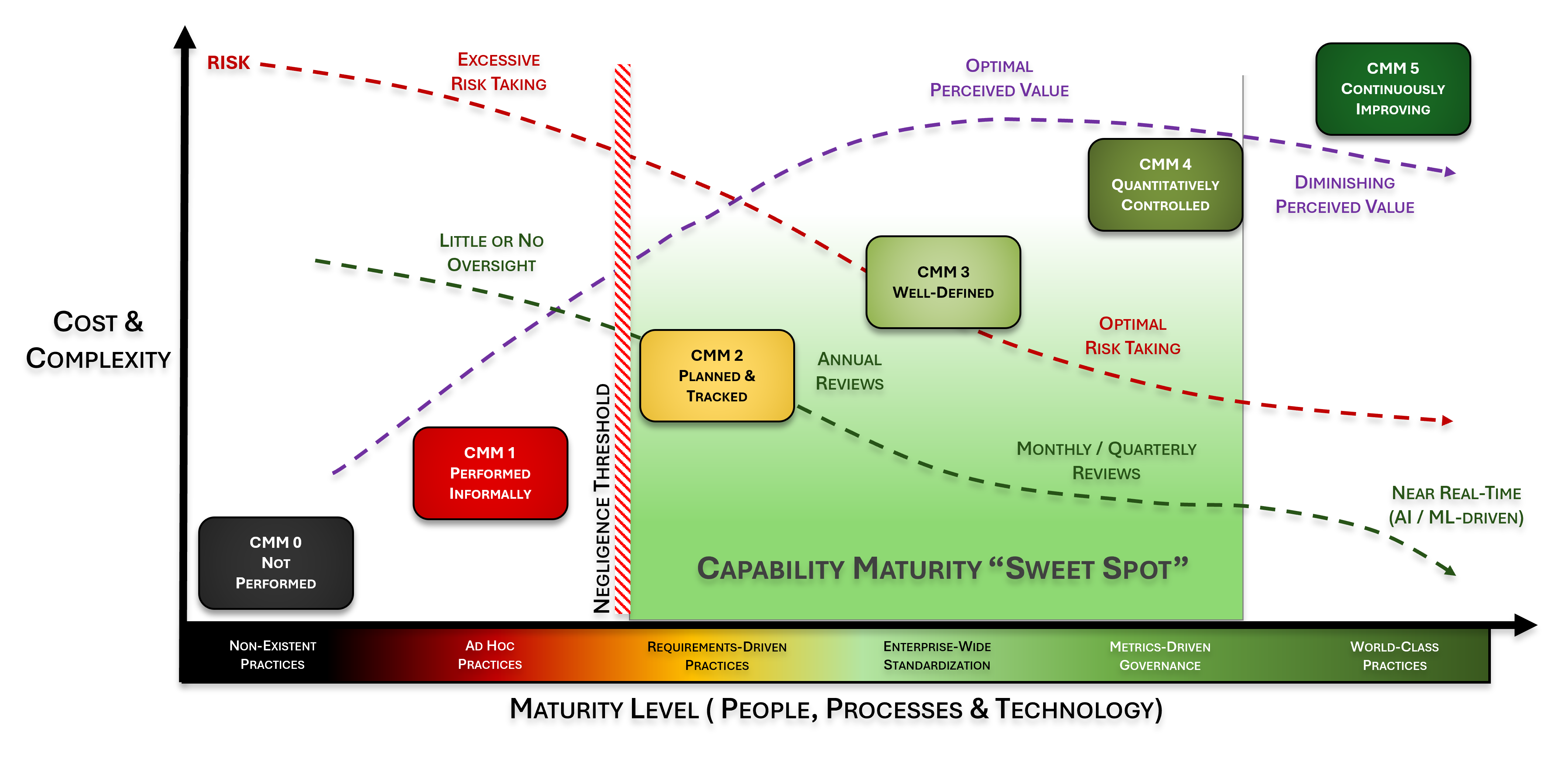

L0 practices, or a lack thereof, are generally considered to be negligent. If a control is reasonably expected to exist, not performing that control is negligent behavior. The need for the control could be due to a law, regulation or contractual obligation (e.g., client contract or industry association requirement).

Note – The reality with an L0 level of maturity is often:

- For smaller organizations, the IT support role only focuses on “break / fix” work or the outsourced IT provider has a scope in its support contract that excludes the control through either oversight or ignorance of the client’s requirements.

- For medium / large organizations, there is IT and/or cybersecurity staff, but governance is functionally non-existent and the control is not performed due to oversight, ignorance or incompetence.

C|P-CMM Level 1 (L1) – Performed Informally

This level of maturity is defined as “ad hoc practices,” where the control is being performed, but lacks completeness & consistency:

- Practices are “ad hoc” where the intent of a control is not met due to a lack of consistency and formality.

- When the control is met, it lacks consistency and formality (e.g., rudimentary practices are performed informally).

- A reasonable person would conclude the control is not consistently performed in a structured manner.

- Performance depends on specific knowledge and effort of the individual performing the task(s), where the performance of these practices is not proactively governed.

- Limited evidence of due care and due diligence exists, where it would be difficult to legitimately disprove a claim of negligence for how cybersecurity/privacy controls are implemented and maintained.

L1 practices are generally considered to be negligent. If a control is reasonably expected to exist, implementing only ad hoc practices to perform that control could be considered negligent behavior. The need for the control could be due to a law, regulation or contractual obligation (e.g., client contract or industry association requirement).

Note – The reality with an L1 level of maturity is often:

- For smaller organizations, the IT support role only focuses on “break / fix” work or the outsourced IT provider has a limited scope in its support contract.

- For medium / large organizations, there is IT and/or cybersecurity staff but there is no management focus to spend time or resources on the control.

C|P-CMM Level 2 (L2) – Planned & Tracked

This level of maturity is defined as “requirements-driven practices,” where the intent of the control is met in some circumstances, but not standardized across the entire organization:

- Practices are “requirements-driven” (e.g., specified by a law, regulation or contractual obligation) and are tailored to meet those specific compliance obligations (e.g., evidence of due diligence).

- Performance of a control is planned and tracked according to specified procedures and work products conform to specified standards (e.g., evidence of due care).

- Controls are implemented in some, but not all applicable circumstances/environments (e.g., specific enclaves, facilities or locations).

- A reasonable person would conclude controls are “compliance-focused” to meet a specific obligation, since the practices are applied at a local/regional level and are not standardized practices across the enterprise.

- Sufficient evidence of due care and due diligence exists to demonstrate compliance with specific statutory, regulatory and/or contractual obligations.

L2 practices are generally considered to be “audit ready” with an acceptable level of evidence to demonstrate due diligence and due care in the execution of the control. L2 practices are generally targeted on specific systems, networks, applications or processes that require the control to be performed for a compliance need (e.g., PCI DSS, HIPAA, CMMC, NIST 800-171, etc.).

It can be argued that L2 practices focus more on compliance than security. The reason for this is that the scope of L2 practices is narrowly focused and not enterprise-wide.

Note – The reality with an L2 level of maturity is often:

- For smaller organizations:

  - IT staff have clear requirements to meet applicable compliance obligations or the outsourced IT provider is properly scoped in its support contract to address applicable compliance obligations.

  - It is unlikely that there is a dedicated cybersecurity role and at best it is an additional duty for existing personnel.

- For medium / large organizations:

  - IT staff have clear requirements to meet applicable compliance obligations.

  - There is most likely a dedicated cybersecurity role or a small cybersecurity team.

C|P-CMM Level 3 (L3) – Well-Defined

This level of maturity is defined as “enterprise-wide standardization,” where the practices are well-defined and standardized across the organization:

- Practices are standardized “enterprise-wide” where the control is well-defined and standardized across the entire enterprise.

- Controls are implemented in all applicable circumstances/environments (deviations are documented and justified).

- Practices are performed according to a well-defined process using approved, tailored versions of standardized processes.

- A control is performed according to specified, well-defined and standardized procedures.

- Control execution is planned and managed using an enterprise-wide, standardized methodology.

- A reasonable person would conclude controls are “security-focused” and address both mandatory and discretionary requirements. Compliance could reasonably be viewed as a “natural byproduct” of secure practices.

- Sufficient evidence of due care and due diligence exists to demonstrate compliance with specific statutory, regulatory and/or contractual obligations.

- The Chief Information Security Officer (CISO), or similar function, develops a security-focused Concept of Operations (CONOPS) that documents organization-wide management, operational and technical measures to apply defense-in-depth techniques. In this context, a CONOPS is a verbal or graphic statement of intent and assumptions regarding operationalizing the identified tasks to achieve the CISO’s stated objectives. The result of the CONOPS is operating the organization’s cybersecurity and data protection program so that it meets business objectives. Control or domain-specific CONOPS may be incorporated as part of a broader operational plan for the cybersecurity and privacy program (e.g., cybersecurity-specific business plan).

It can be argued that L3 practices focus on security over compliance, where compliance is a natural byproduct of those secure practices. These are well-defined and properly-scoped practices that span the organization, regardless of the department or geographic considerations.

Note – The reality with an L3 level of maturity is often:

- For smaller organizations:

  - There is a small IT staff that has clear requirements to meet applicable compliance obligations.

  - There is a very competent leader (e.g., security manager / director) with solid cybersecurity experience who has the authority to direct resources to enact secure practices across the organization.

- For medium / large organizations:

  - IT staff have clear requirements to implement standardized cybersecurity & privacy principles across the enterprise.

  - In addition to the existence of a dedicated cybersecurity team, there are specialists (e.g., engineers, SOC analysts, GRC, privacy, etc.).

  - There is a very competent leader (e.g., CISO) with solid cybersecurity experience who has the authority to direct resources to enact secure practices across the organization.

C|P-CMM Level 4 (L4) – Quantitatively Controlled

This level of maturity is defined as “metrics-driven practices,” where in addition to being well-defined and standardized practices across the organization, there are detailed metrics to enable governance oversight:

- Practices are “metrics-driven” and provide sufficient management insight (based on a quantitative understanding of process capabilities) to predict optimal performance, ensure continued operations, and identify areas for improvement.

- Practices build upon established L3 maturity criteria and have detailed metrics to enable governance oversight.

- Detailed measures of performance are collected and analyzed. This leads to a quantitative understanding of process capability and an improved ability to predict performance.

- Performance is objectively managed, and the quality of work products is quantitatively known.

L4 practices are generally considered to be “audit ready” with an acceptable level of evidence to demonstrate due diligence and due care in the execution of the control, as well as detailed metrics that enable an objective oversight function. Metrics may be daily, weekly, monthly, quarterly, etc.

Note – The reality with an L4 level of maturity is often:

- For smaller organizations, it is unrealistic to attain this level of maturity.

- For medium / large organizations:

  - IT staff have clear requirements to implement standardized cybersecurity & privacy principles across the enterprise.

  - In addition to the existence of a dedicated cybersecurity team, there are specialists (e.g., engineers, SOC analysts, GRC, privacy, etc.).

  - There is a very competent leader (e.g., CISO) with solid cybersecurity experience who has the authority to direct resources to enact secure practices across the organization.

  - Business stakeholders are made aware of the status of the cybersecurity and privacy program (e.g., quarterly business reviews to the CIO/CEO/board of directors). This situational awareness is made possible through detailed metrics.

C|P-CMM Level 5 (L5) – Continuously Improving

This level of maturity is defined as “world-class practices,” where the practices are well-defined and standardized across the organization, have detailed metrics, and are continuously improving:

- Practices are “world-class” capabilities that leverage predictive analysis.

- Practices build upon established L4 maturity criteria and are time-sensitive to support operational efficiency, which likely includes automated actions through machine learning or Artificial Intelligence (AI).

- Quantitative performance goals (targets) for process effectiveness and efficiency are established, based on the business goals of the organization.

- Process improvements are implemented according to “continuous improvement” practices to effect process changes.

L5 practices are generally considered to be “audit ready” with an acceptable level of evidence to demonstrate due diligence and due care in the execution of the control, and they incorporate a capability to continuously improve the process. Interestingly, this is where Artificial Intelligence (AI) and Machine Learning (ML) would exist, since AI/ML would focus on evaluating performance and making continuous adjustments to improve the process. However, AI/ML are not required to be L5.

Note – The reality with an L5 level of maturity is often:

- For small and medium-sized organizations, it is unrealistic to attain this level of maturity.

- For large organizations:

  - IT staff have clear requirements to implement standardized cybersecurity & privacy principles across the enterprise.

  - In addition to the existence of a dedicated cybersecurity team, there are specialists (e.g., engineers, SOC analysts, GRC, privacy, etc.).

  - There is a very competent leader (e.g., CISO) with solid cybersecurity experience who has the authority to direct resources to enact secure practices across the organization.

  - Business stakeholders are made aware of the status of the cybersecurity and privacy program (e.g., quarterly business reviews to the CIO/CEO/board of directors). This situational awareness is made possible through detailed metrics.

  - The organization has a very aggressive business model that requires not only IT, but its cybersecurity and privacy practices, to be innovative to the point of leading the industry in how its products and services are designed, built or delivered.

  - The organization invests heavily into developing AI/ML technologies to make near real-time process improvements to support the goal of being an industry leader.
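The “reality” notes for L4 and L5 imply a practical ceiling by organization size, which can be useful as a sanity check when setting maturity targets. The sketch below encodes one reading of those notes: L4 is treated as unrealistic for smaller organizations and L5 as unrealistic for small and medium-sized ones. The `REALISTIC_CEILING` mapping, the size labels and the `check_target` function are hypothetical, and the ceilings are an interpretation of the text, not a rule published by the SCF.

```python
# Highest level the notes above treat as realistic, per organization size.
# Assumption: these ceilings are an interpretation, not an SCF requirement.
REALISTIC_CEILING = {"small": 3, "medium": 4, "large": 5}


def check_target(org_size: str, target_level: int) -> str:
    """Flag a maturity target that exceeds the realistic ceiling for the size."""
    if org_size not in REALISTIC_CEILING:
        raise ValueError(f"unknown organization size: {org_size!r}")
    if not 0 <= target_level <= 5:
        raise ValueError("target_level must be between 0 and 5")
    ceiling = REALISTIC_CEILING[org_size]
    if target_level > ceiling:
        return (
            f"L{target_level} is likely unrealistic for a {org_size} "
            f"organization (realistic ceiling: L{ceiling})"
        )
    return f"L{target_level} is within the realistic range for a {org_size} organization"


print(check_target("small", 4))
print(check_target("large", 5))
```

A flagged target is a prompt for discussion rather than a prohibition; an organization may still choose a higher target for a specific control if it can resource it.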